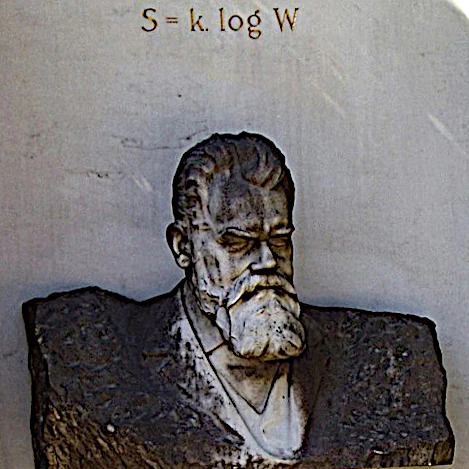

Image description: The tomb of Ludwig Eduard Boltzmann (1844-1906) at the Zentralfriedhof, Vienna, with his bust and his entropy formula.

The Entropy Equation was developed by the Austrian physicist Ludwig Boltzmann. Entropy (S) is a powerful concept that measures the disorder or uncertainty of a physical system.

Boltzmann's entropy equation is essential for understanding the relationship between entropy and the thermodynamic properties of a system, such as temperature, pressure, and energy. It plays a crucial role in the study of thermodynamic equilibrium processes, the evolution of physical systems, and the statistical interpretation of thermodynamic laws.

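Boltzmann's entropy equation is generally expressed as follows: S = k log(W), where k is the Boltzmann constant (1.380649 × 10⁻²³ J/K), W is the number of microstates compatible with the system's macroscopic state, and log denotes the natural logarithm.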

This equation shows that entropy is proportional to the logarithm of the number of possible microstates of the system.
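As a minimal sketch, the formula can be evaluated directly in Python. The toy system here is an assumption for illustration: 4 two-state particles with every configuration accessible, so W = 2⁴. The helper name `boltzmann_entropy` is hypothetical; the constant uses the exact SI value of k.

```python
import math

# Boltzmann constant in J/K (exact value since the 2019 SI redefinition)
K_B = 1.380649e-23


def boltzmann_entropy(num_microstates: int) -> float:
    """Return S = k_B * ln(W) in J/K for W accessible microstates."""
    if num_microstates < 1:
        raise ValueError("W must be at least 1")
    return K_B * math.log(num_microstates)


# Hypothetical toy system: 4 two-state particles, all 2**4 configurations allowed
W = 2 ** 4
print(f"W = {W}, S = {boltzmann_entropy(W):.3e} J/K")
```

Note that a system with a single possible microstate (W = 1) has zero entropy, since log(1) = 0.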

A system is said to have high entropy if its macroscopic state is compatible with a large number of microstates (more disorder), while a system with few accessible microstates has lower entropy (more order).
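The contrast between ordered and disordered states can be illustrated with a hypothetical toy system of 10 two-state particles (think coins or spins). In this sketch, a "macrostate" is assumed to be simply the number of particles that are "up", and W is the number of ways to pick which particles those are. The helper name `microstates` is illustrative, not from the original text.

```python
import math


def microstates(n_particles: int, n_up: int) -> int:
    """Number of ways to choose which n_up of n_particles two-state particles are 'up'."""
    return math.comb(n_particles, n_up)


N = 10  # hypothetical toy system of 10 two-state particles
for n_up in range(N + 1):
    W = microstates(N, n_up)
    # Entropy expressed in units of k_B, i.e. S / k_B = ln W
    print(f"{n_up:2d} up: W = {W:3d}, S/k_B = {math.log(W):.3f}")
```

The fully aligned macrostates (all up or all down) have a single microstate and zero entropy, while the evenly mixed macrostate has the most microstates and therefore the highest entropy.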

The logarithm transforms large values into smaller ones, making certain calculations in physics and mathematics more manageable. If we used W directly in Boltzmann's equation, we would obtain enormous, difficult-to-interpret entropy values: for a macroscopic system, W is a number with more than 10²³ digits. The logarithm tames these values and, just as importantly, makes entropy additive. For two independent systems, the numbers of microstates multiply (W = W₁ × W₂), and because log(W₁ × W₂) = log(W₁) + log(W₂), the entropies simply add (S = S₁ + S₂). In summary, even though log(W) is far smaller than W itself, it gives us a practical scale for reasoning about systems where the number of microstates is gigantic. It's a bit like measuring astronomical distances in light-years instead of kilometers!
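Both properties of the logarithm can be checked numerically. This Python sketch assumes a hypothetical system of one mole of two-state particles, half of them "up", and computes ln W through the log-gamma function so that the astronomically large W itself is never formed. The helper name `log_microstates` is illustrative.

```python
import math


def log_microstates(n_particles: int, n_up: int) -> float:
    """ln C(N, n) computed via log-gamma, so W itself is never formed (no overflow)."""
    return (math.lgamma(n_particles + 1)
            - math.lgamma(n_up + 1)
            - math.lgamma(n_particles - n_up + 1))


# Hypothetical system: one mole of two-state particles, half of them 'up'
N_A = 6.02214076e23  # Avogadro constant (exact SI value)
N = int(N_A)
ln_W = log_microstates(N, N // 2)
print(f"ln W ≈ {ln_W:.4e}")  # W itself would have roughly 1.8e23 decimal digits

# Additivity: for independent systems W_total = W1 * W2, so ln W_total = ln W1 + ln W2
W1, W2 = 252, 120
assert math.isclose(math.log(W1 * W2), math.log(W1) + math.log(W2))
```

Using `math.lgamma` rather than building the binomial coefficient is a design choice for this sketch: the exact integer would be far too large to compute or store, while its logarithm is an ordinary floating-point number.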

The Boltzmann equation did not directly lead to the discovery of the atom, but it played a crucial role in the theoretical understanding of the atomic nature of matter and in the development of statistical physics, whose predictions (notably for Brownian motion) were later confirmed experimentally, settling the question of the existence of atoms.

S = k log(W) connects microscopic behavior (the arrangements and motions of atoms) to macroscopic properties (pressure, temperature, entropy).

Entropy is a fundamental concept used in various fields of science, including thermodynamics, statistical mechanics, information theory, computer science, complexity science, and others.

The definition of entropy may vary slightly depending on the context, but it shares common ideas across these fields.

In all cases, entropy is a measure of the amount of information, disorder, diversity, or uncertainty in a system.
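To make the information-theory sense of entropy concrete, here is a minimal Python sketch (an illustration added here, not part of the original discussion) of Shannon's entropy, H = -Σ p ln p, measured in nats. The function name `shannon_entropy` is hypothetical. When all W states are equally likely, H equals ln W, which is exactly the logarithm in Boltzmann's formula; in that case S = k H.

```python
import math


def shannon_entropy(probs: list[float]) -> float:
    """H = -sum(p * ln p) in nats; states with p = 0 contribute nothing."""
    return sum(-p * math.log(p) for p in probs if p > 0)


W = 8
uniform = [1 / W] * W              # maximum uncertainty: every state equally likely
peaked = [1.0] + [0.0] * (W - 1)   # no uncertainty: the state is known for sure

print(shannon_entropy(uniform), math.log(W))
print(shannon_entropy(peaked))
```

The uniform distribution gives the same value as ln W, while a distribution concentrated on a single state gives zero, mirroring the "one microstate, zero entropy" case in Boltzmann's formula.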

Yet, in the universe, we observe increasingly ordered structures forming from initially less organized states. This seems to contradict the intuitive idea that entropy, as a measure of disorder, must always increase, as suggested by the second principle of thermodynamics.

The increase in local order (such as the formation of galaxies and stars) does not violate the second principle of thermodynamics. This principle applies to the system as a whole: the total entropy of an isolated system, and the Universe is treated as one, cannot decrease over time.

When we observe the formation of galaxies and stars, we must consider the entire system, including the energy and processes involved on a large scale. Thus, what may look like a decrease in disorder on a small scale is more than offset by an increase in entropy on a larger scale, as energy is redistributed.

The structures that emerge in the Universe are not anomalies but manifestations of fundamental physical processes, where local organization is always accompanied by energy dissipation elsewhere.

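Energy dissipation primarily occurs in the form of thermal radiation. When an ordered structure such as a star or galaxy forms locally, the global system still increases its entropy: as a gas cloud contracts under its own gravity, it heats up and radiates energy into space, and that radiation carries away more entropy than the local ordering removes.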
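A deliberately simplified entropy bookkeeping can be sketched in Python. It uses Clausius's relation ΔS = Q/T for heat Q exchanged at a fixed temperature T, treats the forming structure as a hot body and its environment as a cold reservoir, and ignores the 4/3 factor that appears in the entropy of blackbody radiation. The temperatures are illustrative round numbers, and `total_entropy_change` is a hypothetical helper.

```python
def total_entropy_change(heat_j: float, t_hot_k: float, t_cold_k: float) -> float:
    """Net entropy change (J/K) when heat_j joules leave a body at t_hot_k
    and are absorbed by surroundings at t_cold_k (Clausius: dS = Q / T)."""
    if heat_j < 0 or t_hot_k <= 0 or t_cold_k <= 0:
        raise ValueError("heat must be non-negative and temperatures positive")
    ds_body = -heat_j / t_hot_k          # the hot structure loses entropy
    ds_surroundings = heat_j / t_cold_k  # the cold surroundings gain entropy
    return ds_body + ds_surroundings


# Illustrative values: ~5800 K (roughly a Sun-like stellar surface) radiating into
# ~2.7 K surroundings (roughly the cosmic microwave background temperature).
dS = total_entropy_change(heat_j=1.0, t_hot_k=5800.0, t_cold_k=2.7)
print(f"Net entropy change per joule: {dS:.4f} J/K")
```

Because the surroundings are far colder than the emitting body, the entropy they gain (Q/T_cold) dwarfs the entropy the body loses (Q/T_hot), so the net change is positive even though the structure itself may become more ordered.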

In short, the Universe balances the creation of ordered structures by redistributing energy in the form of radiation and other dissipative processes, ensuring that the second principle of thermodynamics is always respected. Far from being an illusion, this apparent order is a natural facet of cosmic chaos.