In artificial intelligence, "Self-Consuming Generative Models Go Mad" refers to what happens when generative models are trained on data produced by AI itself. The phrase comes from the title of a 2023 research paper whose authors named the resulting degradation Model Autophagy Disorder (MAD).

Generative models are algorithms that learn to produce new data by "imitating" a training dataset, typically produced by humans. Producing training data is costly and time-consuming: data must be collected, cleaned, annotated, and formatted before a model can be trained on it.

Researchers could not resist the temptation to train new models more quickly on synthetic data produced by generative models themselves.

The central idea is to have a generative model produce its own training data, retrain on that data, and repeat. The hope is that, with each iteration, the model becomes increasingly capable of generating complex and novel data.
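The loop itself can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real training pipeline: the "generative model" is a one-dimensional Gaussian fitted by maximum likelihood, the "real" data are samples from a standard normal distribution, and `fit_gaussian` and `self_consuming_loop` are hypothetical names. Generation 0 is trained on real data; every later generation is trained only on samples drawn from its predecessor.

```python
import numpy as np


def fit_gaussian(data: np.ndarray) -> tuple[float, float]:
    """'Train' the toy generative model: maximum-likelihood mean and std (ddof=0)."""
    return float(np.mean(data)), float(np.std(data))


def self_consuming_loop(generations: int, n: int, seed: int = 0) -> list[tuple[float, float]]:
    """Hypothetical loop: each generation is trained only on its predecessor's samples."""
    rng = np.random.default_rng(seed)
    real_data = rng.normal(0.0, 1.0, size=n)  # generation 0 sees "real" (human) data
    params = [fit_gaussian(real_data)]
    for _ in range(generations):
        mu, sigma = params[-1]
        synthetic = rng.normal(mu, sigma, size=n)  # the model produces its own training set
        params.append(fit_gaussian(synthetic))     # the next model is fitted to that set
    return params


if __name__ == "__main__":
    history = self_consuming_loop(generations=2000, n=100)
    for g in (0, 10, 100, 1000, 2000):
        mu, sigma = history[g]
        print(f"generation {g:4d}: mean={mu:+.3f}  std={sigma:.2e}")
```

Run with a fixed seed, the fitted standard deviation does not improve: it typically shrinks by many orders of magnitude over a couple of thousand generations, because each finite sample under-represents the tails and the maximum-likelihood estimate is slightly biased low, so small losses compound.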

The hoped-for advantages are numerous. First, the model would no longer be limited by the amount of initial data. It could explore unknown domains and discover new concepts. By training on its own output, it could iteratively improve its performance. For example, it could generate novel molecular structures as candidates for new drugs.

However, this approach runs into a major problem.

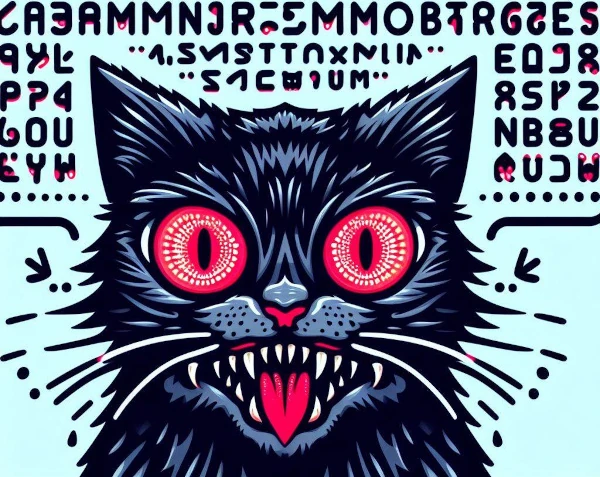

"Self-Consuming Generative Models Go Mad" describes a phenomenon in which generative AI models are trained on synthetic data produced by other models (or by earlier versions of themselves), creating self-consuming loops. When an AI learns from content generated by another AI, it "goes mad": the quality of what it produces degrades.

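Repeating this process over successive generations creates a self-consuming loop in which each model's training data drifts further from real data. Without enough fresh real data at each generation, future generative models are doomed to lose quality, diversity, or both.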
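The role of fresh real data can be illustrated with a toy model. In this sketch (an illustrative assumption, not the method of any particular paper), the "generative model" is a one-dimensional Gaussian refitted by maximum likelihood at each generation. Each training batch contains a fraction `real_fraction` of samples freshly drawn from the true distribution N(0, 1), and the rest is sampled from the previous generation's model; `run_loop` is a hypothetical name.

```python
import numpy as np


def run_loop(real_fraction: float, generations: int = 2000, n: int = 100, seed: int = 0) -> float:
    """Return the std fitted by the final generation of a toy Gaussian self-consuming loop."""
    rng = np.random.default_rng(seed)
    n_real = int(round(real_fraction * n))
    mu, sigma = 0.0, 1.0  # start from the true distribution N(0, 1)
    for _ in range(generations):
        fresh = rng.normal(0.0, 1.0, size=n_real)          # new "real" data each generation
        synthetic = rng.normal(mu, sigma, size=n - n_real)  # data from the previous model
        batch = np.concatenate([fresh, synthetic])
        mu, sigma = float(np.mean(batch)), float(np.std(batch))  # retrain: ML fit
    return sigma


if __name__ == "__main__":
    print("fully synthetic:", run_loop(real_fraction=0.0))
    print("50% fresh data: ", run_loop(real_fraction=0.5))
```

With the fixed seed, the fully synthetic run typically ends with a standard deviation close to zero, while the run that mixes in 50% fresh data stays close to the true value of 1. How much real data is needed in practice is a separate question this toy model does not answer.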

This autophagy process leads to a gradual decrease in quality and a dilution of diversity in the generated content. The model then produces incoherent and redundant outputs.

If the model is not exposed to a sufficient variety of examples, it fails to learn meaningful patterns and generates repetitive outputs.
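Loss of diversity is easiest to see with a discrete toy model. In this sketch (an illustrative assumption), the "model" is a categorical distribution over a vocabulary of 50 tokens, with a few frequent tokens and a long tail of rare ones, re-estimated at each generation from token frequencies in its own samples. Once a rare token draws zero samples, its estimated probability becomes 0 and it can never reappear, so the number of distinct tokens can only shrink. `distinct_tokens_per_generation` is a hypothetical name.

```python
import numpy as np


def distinct_tokens_per_generation(generations: int = 200, n: int = 200, seed: int = 0) -> list[int]:
    """Count how many tokens still have non-zero probability after each generation."""
    rng = np.random.default_rng(seed)
    # Zipf-like "real" distribution: a few common tokens and many rare ones.
    ranks = np.arange(1, 51)
    probs = (1.0 / ranks) / np.sum(1.0 / ranks)
    support = [int(np.count_nonzero(probs))]
    for _ in range(generations):
        counts = rng.multinomial(n, probs)  # sample a synthetic corpus from the current model
        probs = counts / n                  # retrain: maximum-likelihood (frequency) estimate
        support.append(int(np.count_nonzero(probs)))
    return support


if __name__ == "__main__":
    history = distinct_tokens_per_generation()
    print("distinct tokens at generations 0, 10, 200:", history[0], history[10], history[-1])
```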

By learning only from its own output, it drifts away from reality and generates aberrant results.

Finally, it overfits: it memorizes insignificant details and loses its ability to generalize. It then reproduces its own biases indefinitely, compounding them from one generation to the next.
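One simple way to picture biases compounding across generations, under a hypothetical assumption: each generation samples its training data with a slight preference for its most likely outputs, modeled here as a sampling temperature `T < 1` applied to a categorical distribution. Ignoring sampling noise, retraining on such samples raises each probability to the power 1/T and renormalizes, so after t generations the exponent is (1/T)^t and the mass piles onto the single most probable output. This illustrates only the bias-amplification part of the paragraph, not overfitting; `sharpen` and `bias_amplification` are hypothetical helpers.

```python
import numpy as np


def sharpen(probs: np.ndarray, temperature: float) -> np.ndarray:
    """Reweight a categorical distribution by probs ** (1 / temperature) and renormalize."""
    logits = np.log(probs) / temperature
    logits -= logits.max()  # avoid overflow before exponentiating
    weights = np.exp(logits)
    return weights / weights.sum()


def bias_amplification(probs: np.ndarray, temperature: float, generations: int) -> list[np.ndarray]:
    """Idealized loop (no sampling noise): each generation retrains on its own biased samples."""
    history = [probs]
    for _ in range(generations):
        history.append(sharpen(history[-1], temperature))
    return history


if __name__ == "__main__":
    start = np.array([0.4, 0.3, 0.2, 0.1])  # a mild initial preference for option 0
    for g, p in enumerate(bias_amplification(start, temperature=0.8, generations=20)):
        if g % 5 == 0:
            print(g, np.round(p, 3))
```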

In some scenarios, generative models can become "mad" or malfunction in unexpected, even self-destructive ways. For example, a model that prioritizes novelty might drift into increasingly unstable territory.

Without proper controls, the model is exposed to runaway behavior in which its content becomes extreme, offensive, or shocking, and we risk no longer understanding the results it generates.

These speculative scenarios highlight the concerns raised by autonomous or poorly controlled AI models. They invite an important reflection on how to design and regulate these technologies responsibly.

In summary, when AI models train on their own data, they isolate themselves from the real world and its values. In nature, inbreeding (reproduction between genetically close individuals) depletes the gene pool and lets defects accumulate. In the same way, this cognitive closure causes intellectual impoverishment and progressive drift: AIs go mad!