Generative AIs (GPT-3, Copilot, Gemini, Gopher, Chinchilla, PaLM, Human, etc.) are trained on very large datasets, which can contain billions of texts, images, pieces of music or videos.

These deep neural networks are composed of many parameters. For example, OpenAI's GPT-3 model contains 175 billion parameters.

How can blindly increasing the number of these parameters give rise to a form of intelligence?

There is something deeply disturbing about this cognitive phenomenon, because it suggests that intelligence can emerge automatically!

* In the short scale used in English-speaking countries, 1 billion is equal to 10⁹, that is, a thousand million.

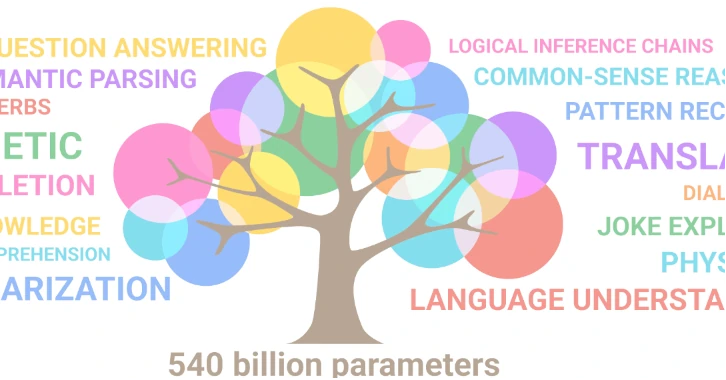

Image posted by Sharan Narang and Aakanksha Chowdhery, software engineers, Google Research.

Neural network parameters are the internal variables of an AI model. These parameters are automatically adjusted during learning from input data.

For example, OpenAI's GPT-3 model has 175 billion parameters. OpenAI's DALL-E model has 12 billion parameters. Google's PaLM model has 540 billion parameters.

The number of parameters depends on the structure of the network, that is to say the number of layers, the number of neurons per layer, and the type of connection between the layers.

For a fully connected network with a single hidden layer, the number of parameters is given by the formula P = (d+1)h + (h+1)o, where d is the number of input neurons, h is the number of hidden neurons, and o is the number of output neurons. The +1 term corresponds to the bias: one additional parameter per neuron that shifts the point at which the neuron activates, independently of its inputs.
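As a quick check of this formula, here is a minimal Python sketch. The layer sizes (784 inputs, 128 hidden neurons, 10 outputs, the shape of a classic handwritten-digit classifier) are illustrative assumptions, and the helper names `mlp_param_count` and `dense_param_count` are hypothetical. The second function assumes the same rule simply repeats for every pair of consecutive fully connected layers; real models such as GPT-3 also use other layer types (attention, embeddings) whose parameters are counted differently.

```python
def mlp_param_count(d: int, h: int, o: int) -> int:
    """P = (d+1)h + (h+1)o for one hidden layer (hypothetical helper)."""
    # (d + 1) * h: each of the h hidden neurons has d input weights plus 1 bias
    # (h + 1) * o: each of the o output neurons has h input weights plus 1 bias
    return (d + 1) * h + (h + 1) * o


def dense_param_count(layer_sizes: list[int]) -> int:
    """Same rule applied to every pair of consecutive fully connected layers."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Illustrative sizes: 784 inputs, 128 hidden neurons, 10 outputs
print(mlp_param_count(784, 128, 10))       # 101770
print(dense_param_count([784, 128, 10]))   # 101770
```

Adding a second hidden layer of 128 neurons, `dense_param_count([784, 128, 128, 10])`, adds (128+1)×128 = 16,512 parameters for a total of 118,282, which shows how quickly the count grows with the number of layers and neurons per layer.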

The number of parameters influences the learning capacity of a neural network, and therefore the performance and behavior of the model. The more parameters there are, the greater the system's capacity to produce correct and consistent results. However, there is a limit!

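A phenomenon called “overfitting” penalizes the system when the number of parameters is too large compared to the amount of data available: the model then memorizes its training examples, noise included, instead of learning patterns that generalize to new data.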
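Overfitting is easiest to see on a toy curve-fitting problem rather than on a neural network, so the minimal Python sketch below is an analogy under stated assumptions: the data (a true rule y = 2x + 1 plus fixed, made-up noise) and all helper names are hypothetical. A 2-parameter straight line is compared with a polynomial that has as many parameters as there are training points (6), which lets it pass through every training point exactly.

```python
def linear_fit(xs, ys):
    """Least-squares straight line: 2 parameters (intercept, slope)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def interpolating_poly(xs, ys):
    """Lagrange polynomial through every point: one parameter per data point."""
    def p(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0  # Lagrange basis: 1 at xi, 0 at every other training x
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return p


def mse(model, xs, ys):
    """Mean squared error of a model on a set of points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical training data: true rule y = 2x + 1 plus fixed, made-up noise
train_x = [0, 1, 2, 3, 4, 5]
noise = [0.3, -0.4, 0.5, -0.3, 0.4, -0.5]
train_y = [2 * x + 1 + e for x, e in zip(train_x, noise)]

# Unseen points generated by the true rule, without noise
test_x = [0.5, 1.5, 2.5, 3.5, 4.5]
test_y = [2 * x + 1 for x in test_x]

small = linear_fit(train_x, train_y)          # 2 parameters
large = interpolating_poly(train_x, train_y)  # 6 parameters

print("training error:", mse(small, train_x, train_y), mse(large, train_x, train_y))
print("test error:    ", mse(small, test_x, test_y), mse(large, test_x, test_y))
```

On these numbers the 6-parameter polynomial has zero training error, because it passes through every noisy point, but a much larger error than the straight line on the unseen points: it has memorized the noise instead of the rule. More data points would constrain it better, which is the intuition behind the next paragraph.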

If we want to increase the number of parameters in a neural network, we must also increase the amount of training data.

This explains why AI operators have an insatiable appetite for our data.

In artificial neural networks (ANNs), each neuron computes a weighted sum of its inputs, then applies an activation function (for example, a threshold) to determine its output, which it passes on to the next layer.
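The calculation performed by a single neuron can be sketched in a few lines of Python. This is a minimal illustration, not how production frameworks implement it: the inputs, weights and biases below are made up, and two example activation functions are shown, a hard threshold (`step`) and ReLU, since the text does not say which one a given network uses.

```python
from typing import Callable, Sequence


def step(z: float) -> float:
    """Hard threshold: the neuron 'fires' (1.0) only if z is positive."""
    return 1.0 if z > 0 else 0.0


def relu(z: float) -> float:
    """ReLU, a common activation in modern networks: max(0, z)."""
    return max(0.0, z)


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float,
           activation: Callable[[float], float]) -> float:
    # Weighted sum of the inputs, shifted by the bias
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The activation turns this sum into the value passed to the next layer
    return activation(z)


def layer(inputs, weight_rows, biases, activation):
    """A layer is several neurons reading the same inputs."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


x = [1.0, 2.0]  # made-up inputs
print(neuron(x, [0.5, -0.25], 0.1, step))  # weighted sum is 0.1, so 1.0
print(neuron(x, [0.5, -0.25], 0.1, relu))  # 0.1
print(layer(x, [[0.5, -0.25], [-1.0, 0.2]], [0.1, 0.3], relu))  # [0.1, 0.0]
```

Stacking such layers, each feeding its outputs to the next, gives the networks discussed here; the weights and biases are the parameters adjusted during training.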

From this simple mathematical process, something singular emerges.

The network only predicts the next word, or token, in the sentence. And yet an ordered, rational and coherent sentence emerges, even though it is the result of a probabilistic process.
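This probabilistic step can be illustrated with a toy Python example. Everything in it is a hypothetical assumption: a real model computes one score (logit) for each of tens of thousands of tokens with a neural network, whereas this sketch hard-codes four made-up scores for the context "The cat sat on the". The softmax function turns the scores into probabilities; the next token is then either the most probable one (greedy decoding) or drawn at random according to those probabilities (sampling).

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def next_token(vocab, logits, rng=None):
    """Greedy choice if no random generator is given, otherwise sample."""
    probs = softmax(logits)
    if rng is None:
        return vocab[probs.index(max(probs))]  # most probable token
    return rng.choices(vocab, weights=probs, k=1)[0]  # weighted random draw


# Made-up scores for the context "The cat sat on the"
vocab = ["mat", "sofa", "moon", "equation"]
logits = [3.2, 2.5, 0.4, -1.0]

for token, p in zip(vocab, softmax(logits)):
    print(f"{token}: {p:.3f}")
print("greedy:", next_token(vocab, logits))  # greedy: mat
print("sampled:", next_token(vocab, logits, random.Random(42)))
```

Nothing in this procedure checks whether a continuation is true; it only measures how probable it is. Generating a whole sentence means repeating the step, appending each chosen token to the context.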

For this magician of language, who juggles words without worrying about their meaning, the notion of truth is irrelevant. The system does not seek to provide correct answers, but rather probable sentences.

In other words, a system that has no connection to our reality, that is devoid of meaning and knowledge, and that does not distinguish "true" from "false" can still provide an "intelligent" response.

It is thanks to its immense training corpus that the AI gives the impression of understanding the context of a sentence, the author's intention and the nuances of language.

There is something deeply disturbing about this manifestation!!

How can a phenomenon as complex and sophisticated as intelligence appear in a virtual environment?

Examples of emergent concepts:

- Just after the Big Bang, the universe is extremely hot and dense. In this extreme environment, matter emerges from pure energy, in accordance with Einstein's equation E=mc². Elementary particles such as quarks, electrons and neutrinos, which did not exist before, thus emerge in the early universe.

- Life is an emergent phenomenon: it results from the interaction of simpler components, such as the chemical molecules that constitute it. However, it has new properties that are irreducible to these components. Beyond a certain level of molecular organization, it appears in an environment where it did not exist before.

An emergent concept arises from a more fundamental one while remaining new and irreducible to it. In other words, new properties appear along with the emergent concept, in an environment where they were not previously present. These new properties seem to be a natural response to the specific physical conditions of that environment.

AI models before 2017 were trained on much smaller datasets than those used today. They were far from perfect: generative AIs did not work very well.

As the data available for training grew, data scientists intuitively increased the number of parameters. Beyond a miraculous threshold, they observed a significant improvement in results.

This phenomenon became apparent with GPT-2 (Generative Pre-trained Transformer 2), released in 2019 and built on the Transformer architecture introduced in 2017. The model marked a turning point in the field of text generation by demonstrating its ability to produce human-quality text.

What happened?

Before 2017, the scale of models (training data and neural architectures) increased, but nothing happened: performance was poor and stagnated. Then, suddenly, when scale reached a threshold, there was a phase transition, in other words a change of state of the system, as in physics, caused by the diversity of the data and the number of parameters.

Suddenly, richer, deeper, more complex interactions between neurons appeared.

The remarkable thing about this miraculous evolution is the emergence of more sophisticated cognitive abilities that now seem "intelligent" to us.

Scientists have a hard time explaining this phase transition. However, an “intelligence” does indeed emerge mathematically from the interaction of very simple components, such as data, algorithms, models and parameters!!

What does this emergence from a machine tell us about the nature of intelligence itself?

Machine learning is a non-linear process, meaning that small changes can lead to large changes in a model's behavior. At present, we do not understand how these models make their decisions, which makes their future behavior difficult to predict.

The field of AI is evolving very quickly, with new technologies and architectures emerging all the time. From the increasing complexity of models, other unexpected properties could emerge, such as creativity, art, understanding of reality and even consciousness.

AI was originally designed to mimic the capabilities of the human brain. To do this, its designers drew inspiration from models of biological neurons to create artificial neural networks.

It is likely that in the future, AI and brain research will enrich each other.

If we use AI and let it evolve on its own, it may provide us with the keys to unlocking the mysteries of the human brain.

“Chance is the god of inventors.” - Pierre Dac (1893-1975) French humorist.