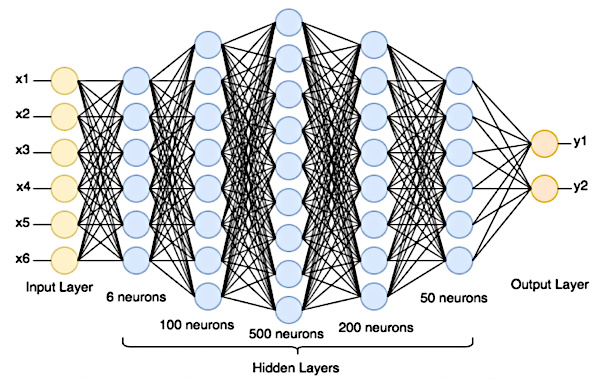

Image: An artificial neural network is made up of a succession of layers of interconnected neurons, each of which takes its inputs from the outputs of the previous layer. In an artificial neural network, the number of neurons per layer can be tens of thousands and the number of layers can reach several hundred. Image credit: public domain.

To understand how artificial neural networks work, we first need to understand what artificial intelligence (AI) means. AI consists of creating computer systems capable not only of automating tasks without human intervention, but also of learning, adapting, improving, communicating and, above all, making decisions. In this sense, AI seeks to reproduce aspects of human intelligence.

The definition of intelligence is highly debatable, but the meaning that interests us here is that of the functions controlled by the brain. Whether a function is mechanical, like walking, or cognitive, like decision-making, it relies on the same architecture in every cerebral area of the brain. Furthermore, all functions are of equal importance, without distinction between those considered noble or less noble. They all involve the same complexity of computation, carried out by an enormous network of billions of interconnected neurons that exchange information through electrical and chemical signals.

In the human brain, the process of communication between neurons is as follows:

- When the electrical signals generated at the neuron's cell membrane reach a critical threshold, they trigger a brief electrical impulse called an "action potential". Action potentials travel along the axon to the neuron's synapses.

- At the synapses (the contact points between neurons), action potentials trigger the release of chemical molecules called "neurotransmitters".

- Neurotransmitters then bind to receptors located on the membrane of the postsynaptic neuron (the receiving neuron). This chemical binding triggers an electrical response in the postsynaptic neuron.

- The postsynaptic neuron integrates all the inputs it receives from the presynaptic neurons, and if the critical threshold is reached, it in turn generates an action potential that propagates along its own axon, continuing the transmission of information through the network.

Depending on the types of neurotransmitters released, presynaptic activity can have an excitatory or inhibitory effect on the electrical activity of the postsynaptic neuron. This makes the firing of an action potential more or less likely.

This complex process underlies brain function and human cognition. Researchers took it as the starting point for artificial intelligence models, very simple at first and then increasingly sophisticated as technology advanced.

The artificial neuron is the basic unit of an artificial neural network. An artificial neural network is made up of a succession of layers of interconnected neurons, each of which takes its inputs from the outputs of the previous layer.

Artificial neurons are not computer bits (0 or 1), but mathematical abstractions (numbers, operations, functions, equations, matrices, sets, probabilities, etc.). In other words, they are processing units that perform mathematical operations on the data presented to them. Unlike computer bits, which are the basis for storing digital information, they do not store data.

In an artificial neural network, each neuron is characterized by a level of activity captured by a variable called the "activation potential". Each synapse (the connection between two neurons) is characterized by another variable called the "synaptic weight".

- The activation potential represents the electrical state of the postsynaptic neuron at a given moment. It is calculated by summing the input signals from presynaptic neurons, with each signal modulated by the corresponding synaptic weight. The activation potential can vary continuously in a range from negative to positive values, depending on the intensity of the incoming signals.

- Synaptic weights determine whether a synaptic connection is excitatory, inhibitory or null. The weights modulate the impact of input signals on the activation potential. Positive weights increase activity, negative weights reduce it, and zero weights have no effect.

The activation potential results from the combination of input signals weighted by the synaptic weights. This potential is then subjected to an activation function, which introduces non-linearity and determines whether the postsynaptic neuron generates a response (action potential) or not. Ultimately, these mechanisms allow the neuron to process information and respond to stimuli in an adaptive manner. The functioning of these variables is fundamental for modeling the behavior of neurons, both in biological neural networks and in artificial neural networks.
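In symbols, for a neuron receiving input signals $x_1, \dots, x_n$ through synaptic weights $w_1, \dots, w_n$, the activation potential $a$ and the output $y$ can be written as below. The activation function $f$ is generic; the step function with threshold $\theta$ is shown only as one simple example, matching the threshold used in the image-classification example.

$$
a = \sum_{i=1}^{n} w_i x_i, \qquad y = f(a), \qquad \text{e.g. } f(a) = \begin{cases} 1 & \text{if } a > \theta \\ 0 & \text{otherwise} \end{cases}
$$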

Let's imagine an artificial neural network used for image classification. This network includes a postsynaptic neuron which receives connections from three presynaptic neurons. Each of these three presynaptic neurons is associated with a specific feature of the image that the network analyzes, for example, the presence of vertical lines, horizontal lines and curves.

The postsynaptic neuron starts with an activation potential of 0.

When the three presynaptic neurons send their signals, each signal is multiplied by the synaptic weight associated with the corresponding connection. Suppose the synaptic weights are:

- Synaptic weight for the vertical-lines feature: +0.5

- Synaptic weight for the horizontal-lines feature: -0.3

- Synaptic weight for the curves feature: +0.2

The signals from the three presynaptic neurons are weighted by the respective synaptic weights and summed.

If we have the following signals:

- Signal for vertical lines: 1

- Signal for horizontal lines: 0.5

- Signal for curves: 0.8

The activation potential would be calculated as follows:

Activation potential = (1 * 0.5) + (0.5 * (-0.3)) + (0.8 * 0.2) = 0.5 - 0.15 + 0.16 = 0.51

If the activation potential exceeds a defined threshold (e.g., 0), the postsynaptic neuron generates an action potential, indicating that the combination of features it responds to has been detected in the image. Here, 0.51 is above 0, so the neuron fires.
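The calculation above can be reproduced in a few lines. This is a minimal sketch that uses the weights, signals and threshold of 0 from the example; the feature names and the helper functions `activation_potential` and `fires` are illustrative, not part of any library.

```python
# One postsynaptic neuron with a step (threshold) activation.
weights = {"vertical_lines": 0.5, "horizontal_lines": -0.3, "curves": 0.2}
signals = {"vertical_lines": 1.0, "horizontal_lines": 0.5, "curves": 0.8}
THRESHOLD = 0.0


def activation_potential(signals, weights):
    """Sum of each input signal multiplied by its synaptic weight."""
    return sum(signals[name] * weights[name] for name in weights)


def fires(potential, threshold=THRESHOLD):
    """Step activation: emit an 'action potential' only above the threshold."""
    return potential > threshold


potential = activation_potential(signals, weights)
print(f"activation potential = {potential:.2f}")  # activation potential = 0.51
print("fires:", fires(potential))                  # fires: True
```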

In this example, synaptic weights play a crucial role in determining the relative importance of each image feature. The input signals weighted by the synaptic weights are used to calculate the activation potential, which, if it exceeds the threshold, will trigger the response of the postsynaptic neuron. This allows the neural network to make decisions based on features detected in the image.

The hardware infrastructure of an artificial neural network is not biological; it is the same as that of classical computing (microprocessors, graphics cards, etc.).

The software infrastructure of an artificial neural network, however, is different. Machine learning algorithms learn from data and adjust their behavior based on the examples provided, whereas traditional algorithms follow static, explicit instructions that do not change on their own. This is why AI is a revolution: writing by hand the rules encoded in GPT-3.5, the model behind ChatGPT, with its reported 175 billion parameters, would have taken thousands of years.

A neural network is organized in layers, where each artificial neuron (a mathematical function) receives inputs, performs calculations on them and produces an output. The first layer is the input layer, which receives the raw data (text, a digital image or other collected data). It is followed by one or more hidden layers (not accessible from the outside), and finally by the output layer, which produces the predictions.

To make a prediction, data is propagated from the input layer to the output layer. Each neuron sums its weighted inputs, applies an activation function, and passes the result to the next layer.
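The propagation described above can be sketched in plain Python. This is an illustration under stated assumptions: the 3-2-1 layer sizes, all weight values, the bias added by each neuron, and the choice of ReLU for the hidden layer are made up for the example.

```python
def relu(z):
    """A common activation function: max(0, z)."""
    return max(0.0, z)


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each neuron sums its weighted inputs,
    adds its bias and applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Propagate the data from the input layer to the output layer."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense_layer(activations, weights, biases, activation)
    return activations


# Hypothetical network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.3, 0.2], [0.1, 0.4, -0.6]], [0.0, 0.1], relu),
    ([[1.0, -1.0]], [0.0], lambda z: z),  # identity activation on the output
]
print(forward([1.0, 0.5, 0.8], layers))
```

Each layer's output list becomes the next layer's input list, which is exactly the "passes the result to the next layer" step.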

Activation functions introduce nonlinearities into the network. This means that a neuron's output is not simply proportional to its weighted inputs; without them, any stack of layers would be equivalent to a single linear transformation. This nonlinearity is what gives neural networks their ability to solve a wide variety of problems, from image recognition to machine translation to natural language modeling.
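A one-dimensional sketch shows why the nonlinearity matters. The two single-weight "layers" and their values `w1`, `w2` are arbitrary assumptions, and ReLU is used as one common example of an activation function.

```python
def linear_stack(x, w1, w2):
    """Two layers with no activation function: w2 * (w1 * x)."""
    return w2 * (w1 * x)


def relu_stack(x, w1, w2):
    """The same two layers with a ReLU activation in between."""
    return w2 * max(0.0, w1 * x)


w1, w2 = 2.0, 3.0  # arbitrary illustrative weights
for x in (-1.0, 1.0, 2.0):
    # linear_stack always equals (w1 * w2) * x, i.e. a single linear layer;
    # relu_stack outputs 0 for negative x, so it is no longer proportional to x.
    print(x, linear_stack(x, w1, w2), relu_stack(x, w1, w2))
```

However many purely linear layers are stacked, they collapse into one multiplication; the activation function is what prevents that collapse.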

After making a prediction, the network compares its own results to the correct labels to measure the error or difference between the two. Correct labels are an essential component of the training set of a supervised learning model. They are provided for each example in the training set to allow the model to learn to make accurate predictions.

In the next step, the backpropagation algorithm computes how much each weight (the internal parameters that determine how neurons react to inputs) contributed to the error, and the weights are adjusted accordingly. This allows the network to find weight values that minimize the model's error. The process repeats until the network reaches a satisfactory level of performance.

Training also involves hyperparameters, settings chosen before training rather than learned from the data, such as the learning rate (the size of the step taken each time the weights are updated), the batch size (how many training examples are used for each weight update), the network architecture (number of layers and number of neurons per layer), the choice of activation function in each layer, etc.

Researchers train and evaluate the model for each candidate combination of hyperparameters, then choose the combination that gives the best performance on the validation data (a set of examples kept separate from the training data).
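Grid search, which tries every combination from a list of candidate values, is one common way to carry out this selection. The sketch below is hypothetical throughout: a one-variable linear model trained by gradient descent stands in for a neural network, and the toy data, grid values and function names are assumptions chosen so the example stays small.

```python
import itertools

# Toy data (an assumption): points on the line y = 2x + 1.
train = [(x / 10, 2 * (x / 10) + 1) for x in range(0, 20)]
valid = [(x / 10 + 0.05, 2 * (x / 10 + 0.05) + 1) for x in range(0, 20, 3)]


def train_model(data, learning_rate, epochs):
    """Fit y = w*x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(model, data):
    """Mean squared error of the model on a dataset."""
    w, b = model
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Candidate hyperparameter values (chosen arbitrarily for the sketch).
grid = {"learning_rate": [0.001, 0.01, 0.1], "epochs": [10, 100, 1000]}

results = {}
for lr, epochs in itertools.product(grid["learning_rate"], grid["epochs"]):
    model = train_model(train, lr, epochs)      # learn on training data only
    results[(lr, epochs)] = mse(model, valid)   # score on validation data

best = min(results, key=results.get)
print("best (learning_rate, epochs):", best, "validation MSE:", results[best])
```

Only the validation scores drive the choice; the test data of the final evaluation step is never used here.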

Ultimately, the trained model is evaluated on new, never-before-seen data.

Let's assume that we have a dataset of 100,000 different 28x28-pixel grayscale images of handwritten digits from 0 to 9.

Our neural network will have an input layer sized to the images (28x28 = 784 neurons, one per pixel), one or more hidden layers, and an output layer with 10 neurons (one for each possible digit, 0 to 9). Each neuron in the output layer represents the probability that the image shows a particular digit.
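To get a sense of scale, the number of trainable parameters can be counted. This sketch assumes fully connected layers, one bias per neuron and a single hidden layer of 128 neurons; the hidden size is an arbitrary assumption, not taken from the text.

```python
def count_parameters(layer_sizes):
    """Weights plus biases of a fully connected network.

    Each neuron in a layer has one weight per neuron in the previous layer,
    plus one bias.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


input_size = 28 * 28                  # one input neuron per pixel: 784
layer_sizes = [input_size, 128, 10]   # 128 hidden neurons is an assumption
print(count_parameters(layer_sizes))  # 101770
```

Even this small network has over 100,000 weights and biases to learn, all of which start at random values.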

The weights of the connections between neurons are initially set to random values.

The raw data, for example the digital image of a handwritten 3, is fed into the input layer.

For images, the network typically includes convolution layers (it is then called a convolutional neural network). To analyze local regions of the image, convolution filters (e.g. 5x5 pixels) slide over the image pixel by pixel and extract a hierarchy of visual feature maps. Typically, a convolution layer uses several different filters, each of which learns to detect specific features of the image, such as edges, textures or patterns. The features extracted by the first convolution layers are simple (like edges), while deeper layers learn to recognize more complex features (like patterns or objects).
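The sliding-filter operation can be sketched in plain Python. Assumptions: stride 1 (one pixel at a time), no padding, a 3x3 filter instead of 5x5 to keep the output small, and a hand-written vertical-edge filter, whereas a real network would learn its filter values. Like most deep-learning libraries, the function computes a cross-correlation (the filter is not flipped).

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` one pixel at a time (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 5x6 image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]
# Hand-written 3x3 vertical-edge filter.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

for row in convolve2d(image, vertical_edge):
    print(row)  # [0.0, 3.0, 3.0, 0.0] on each of the 3 rows
```

The feature map is strongest exactly where the image changes from dark to bright, and zero over the uniform regions.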

Data propagates through the network along the weighted connections. At each layer, the neurons compute the weighted sum of their inputs and apply their activation function, and the result becomes the input of the next layer.

The output layer produces a score for each digit (0-9). A function such as softmax transforms these scores into probabilities, one for each possible class, that sum to 1. The digit with the highest probability is the network's prediction.
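Softmax is a common choice for this step; the sketch below assumes it, and the ten output scores are invented for illustration.

```python
import math


def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores from the 10 output neurons (digits 0-9).
scores = [0.1, -1.2, 0.3, 4.0, -0.5, 0.0, 0.2, 1.1, 0.4, -2.0]
probs = softmax(scores)
prediction = max(range(10), key=lambda digit: probs[digit])
print("predicted digit:", prediction)  # predicted digit: 3
```

Because softmax preserves the ordering of the scores, the predicted digit is also the one with the highest raw score; softmax adds a probabilistic interpretation that the cost function can use.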

Then the network will compare its prediction with the actual label of the image (for example, "it's a 3").

A cost function measures the divergence between the model predictions and the actual labels. It assigns a higher cost when the model predictions are far from the expected labels. When training a neural network, the objective is to minimize the cost function.
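Cross-entropy is a cost function commonly used for this kind of classification; the sketch assumes it, and the two probability vectors are made up. The cost is the negative logarithm of the probability the model assigned to the correct digit, so it is small when the model is confidently right and large when it is confidently wrong.

```python
import math


def cross_entropy(probs, label):
    """Cost for one example: -log of the probability given to the true class."""
    return -math.log(probs[label])


confident_right = [0.01] * 10
confident_right[3] = 0.91   # most probability on the true digit, 3
confident_wrong = [0.01] * 10
confident_wrong[8] = 0.91   # most probability on digit 8

print(cross_entropy(confident_right, 3))  # about 0.094
print(cross_entropy(confident_wrong, 3))  # about 4.6
```

During training, this per-example cost is typically averaged over the examples in a batch.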

To do this, the error is propagated backward through the network, from the output layer to the input layer; this is backpropagation. As the error propagates in reverse, it is used to compute the gradient of the cost function with respect to each parameter (weights and biases) in each layer. An optimization algorithm such as gradient descent then updates the parameters, moving each one in the direction opposite to its gradient, which is the direction that locally decreases the cost function.
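The following sketch applies backpropagation and gradient descent to the smallest possible network, under assumptions chosen for readability rather than taken from the text: one input, one sigmoid hidden neuron, one linear output neuron, a squared-error cost, arbitrary initial parameters and a learning rate of 0.5. The gradients are computed with the chain rule, starting from the output layer and moving back toward the input.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)   # hidden neuron
    y_hat = w2 * h + b2        # output neuron (linear)
    return h, y_hat


def cost(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backprop(params, x, y):
    """Gradients of the cost w.r.t. (w1, b1, w2, b2), output layer first."""
    _, _, w2, _ = params
    h, y_hat = forward(params, x)
    d_yhat = y_hat - y               # dC/dy_hat
    d_w2, d_b2 = d_yhat * h, d_yhat  # output-layer parameters
    d_h = d_yhat * w2                # error sent back to the hidden layer
    d_z1 = d_h * h * (1.0 - h)       # through the sigmoid's derivative
    d_w1, d_b1 = d_z1 * x, d_z1      # hidden-layer parameters
    return [d_w1, d_b1, d_w2, d_b2]


def gradient_descent_step(params, x, y, learning_rate=0.5):
    grads = backprop(params, x, y)
    # Move each parameter against its gradient to reduce the cost.
    return [p - learning_rate * g for p, g in zip(params, grads)]


params = [0.3, -0.1, 0.8, 0.0]   # arbitrary initial values
x, y = 1.0, 0.2                  # a single training example
before = cost(params, x, y)
for _ in range(50):
    params = gradient_descent_step(params, x, y)
print(f"cost before: {before:.4f}, after: {cost(params, x, y):.6f}")
```

Real networks apply the same chain-rule bookkeeping to millions of parameters at once, usually through a framework that computes the gradients automatically.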

This process is repeated over a very large number of training images, and the network adjusts its parameters at each iteration to get better at classifying handwritten digits.

Once the network is trained, it is tested on a separate dataset (images not yet seen) to evaluate its performance in terms of classification accuracy.

Adjusting weights in artificial neural networks is inspired by the functioning of the human brain. In the brain, neurons are connected to each other by synapses, and the strength of the connection between two neurons is called its "synaptic weight". Synaptic weights change as we learn. This process, still poorly understood, is called synaptic plasticity. It is a fascinating process that allows us to adapt and learn throughout life.

For example:

- When we learn the French word "chien" ("dog"), the neurons that represent the "ch" sound, the "i" sound, the "en" sound and the word "chien" are activated together. The more we use the word "chien", the more often these neurons are activated together, and the stronger the connections between them become.

- The more a traumatized person is exposed to positive and safe experiences, the more likely the synapses between the neurons that represent the traumatic event are to weaken.
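A classic computational model of this kind of plasticity is Hebb's rule, often summarized as "neurons that fire together wire together". The sketch below is a simplified, hypothetical version: the learning rate, the decay term that weakens connections when the neurons are not co-activated, and the activity values are all assumptions. Artificial networks trained as described earlier rely on backpropagation and gradient descent, not on this rule.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1, decay=0.05):
    """Hebb-style update: joint pre/post activity strengthens the weight;
    the decay term (a simplifying assumption) slowly weakens it otherwise."""
    return weight + learning_rate * pre * post - decay * weight


weight = 0.0
for _ in range(5):    # the two neurons are repeatedly activated together
    weight = hebbian_update(weight, pre=1.0, post=1.0)
strengthened = weight

for _ in range(20):   # the presynaptic neuron fires but the postsynaptic one does not
    weight = hebbian_update(weight, pre=1.0, post=0.0)

print(f"after co-activation: {strengthened:.3f}, after 20 unpaired steps: {weight:.3f}")
```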